2026

TensorSocket: Shared Data Loading for Deep Learning Training

Ties Robroek, Neil Kim Nielsen, Pınar Tözün

International Conference on Management of Data (SIGMOD) 2026

Training deep learning models is a repetitive and resource-intensive process. Data scientists often train several models before landing on a set of parameters (e.g., hyper-parameter tuning) and model architecture (e.g., neural architecture search), among other things that yield the highest accuracy. The computational efficiency of these training tasks depends highly on how well the training data is supplied to the training process. The repetitive nature of these tasks results in the same data processing pipelines running over and over, exacerbating the need for and costs of computational resources. In this paper, we present TensorSocket to reduce the computational needs of deep learning training by enabling simultaneous training processes to share the same data loader. TensorSocket mitigates CPU-side bottlenecks in cases where the collocated training workloads have high throughput on GPU, but are held back by lower data-loading throughput on CPU. TensorSocket achieves this by reducing redundant computations and data duplication across collocated training processes and leveraging modern GPU-GPU interconnects. While doing so, TensorSocket can train and balance differently sized models, serve multiple batch sizes simultaneously, and remain hardware- and pipeline-agnostic. Our evaluation shows that TensorSocket enables scenarios that are infeasible without data sharing, increases training throughput by up to 100%, and, when utilizing cloud instances, achieves cost savings of 50% by reducing the hardware resource needs on the CPU side. Furthermore, TensorSocket outperforms state-of-the-art solutions for shared data loading such as CoorDL and Joader: it is easier to deploy and maintain, and it matches or exceeds their throughput while requiring fewer CPU resources.

2025

Modyn: Data-Centric Machine Learning Pipeline Orchestration

Maximilian Böther, Ties Robroek, Viktor Gsteiger, Xianzhe Ma, Pınar Tözün, Ana Klimovic

International Conference on Management of Data (SIGMOD) 2025

In real-world machine learning (ML) pipelines, datasets are continuously growing. Models must incorporate this new training data to improve generalization and adapt to potential distribution shifts. The cost of model retraining is proportional to how frequently the model is retrained and how much data it is trained on, which makes the naive approach of retraining from scratch each time impractical. We present Modyn, a data-centric end-to-end machine learning platform. Modyn's ML pipeline abstraction enables users to declaratively describe policies for continuously training a model on a growing dataset. Modyn pipelines allow users to apply data selection policies (to reduce the number of data points) and triggering policies (to reduce the number of trainings). Modyn executes and orchestrates these continuous ML training pipelines. The system is open-source and comes with an ecosystem of benchmark datasets, models, and tooling. We formally discuss how to measure the performance of ML pipelines by introducing the concept of composite models, enabling fair comparison of pipelines with different data selection and triggering policies. We empirically analyze how various data selection and triggering policies impact model accuracy, and also show that Modyn enables high throughput training with sample-level data selection.

Towards A Modular End-To-End Machine Learning Benchmarking Framework

Robert Bayer, Ties Robroek, Pınar Tözün

International Workshop on Testing Distributed Internet of Things Systems (TDIS) 2025

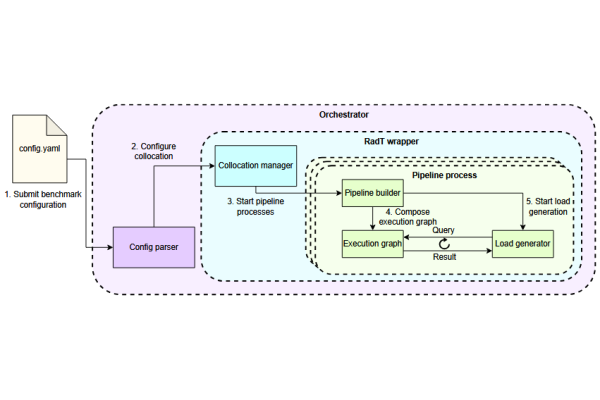

Machine learning (ML) benchmarks are crucial for evaluating the performance, efficiency, and scalability of ML systems, especially as the adoption of complex ML pipelines, such as retrieval-augmented generation (RAG), continues to grow. These pipelines introduce intricate execution graphs that require more advanced benchmarking approaches. Additionally, collocating workloads can improve resource efficiency but may introduce contention challenges that must be carefully managed. Detailed insights into resource utilization are necessary for effective collocation and optimized edge deployments. However, existing benchmarking frameworks often fail to capture these critical aspects. We introduce a modular end-to-end ML benchmarking framework designed to address these gaps. Our framework emphasizes modularity and reusability by enabling reusable pipeline stages, facilitating flexible benchmarking across diverse ML workflows. It supports complex workloads and measures their end-to-end performance. The workloads can be collocated, with the framework providing insights into resource utilization and contention between the concurrent workloads.

2024

An Analysis of Collocation on GPUs for Deep Learning Training

Ties Robroek, Ehsan Yousefzadeh-Asl-Miandoab, Pınar Tözün

European Workshop on Machine Learning and Systems (EuroMLSys) 2024

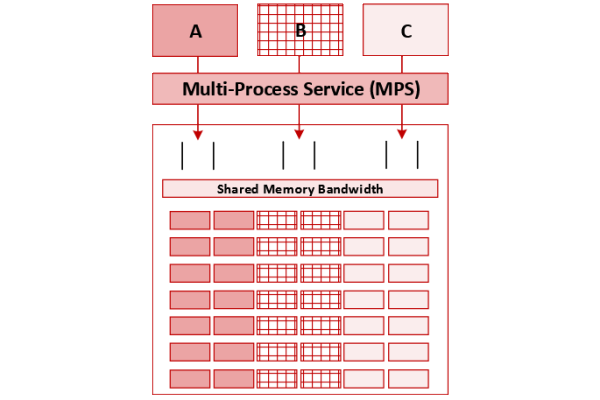

Deep learning training is an expensive process that extensively uses GPUs, but not all model training saturates modern powerful GPUs. Multi-Instance GPU (MIG) is a new technology introduced by NVIDIA that can partition a GPU to better fit workloads that do not require all the memory and compute resources of a full GPU. In this paper, we examine the performance of a MIG-enabled A100 GPU under deep learning workloads containing various sizes and combinations of models. We contrast the benefits of MIG to older workload collocation methods on GPUs: naïvely submitting multiple processes on the same GPU and utilizing Multi-Process Service (MPS). Our results demonstrate that collocating multiple model training runs may yield significant benefits. In certain cases, it can lead to up to four times the training throughput despite increased epoch time. On the other hand, the aggregate memory footprint and compute needs of the models trained in parallel must fit the available memory and compute resources of the GPU. MIG can be beneficial thanks to its interference-free partitioning, especially when the sizes of the models align with the MIG partitioning options. MIG's rigid partitioning, however, may create sub-optimal GPU utilization for more dynamic mixed workloads. In general, we recommend MPS as the best performing and most flexible form of collocation for model training for a single user submitting training jobs.

2023

Data Management and Visualization for Benchmarking Deep Learning Training Systems

Ties Robroek, Aaron Duane, Ehsan Yousefzadeh-Asl-Miandoab, Pınar Tözün

International Workshop on Testing Distributed Internet of Things Systems (TDIS) 2023 Best Presentation

Evaluating hardware for deep learning is challenging. The models can take days or more to run, the datasets are generally larger than what fits into memory, and the models are sensitive to interference. Scaling this up to a large number of experiments and keeping track of both software and hardware metrics thus poses real difficulties, as these problems are exacerbated by sheer experimental data volume. This paper explores some of the data management and exploration difficulties when working on machine learning systems research. We introduce our solution in the form of an open-source framework built on top of a machine learning lifecycle platform. Additionally, we introduce a web environment for visualizing and exploring experimental data.

Profiling and Monitoring Deep Learning Training Tasks

Ehsan Yousefzadeh-Asl-Miandoab, Ties Robroek, Pınar Tözün

European Workshop on Machine Learning and Systems (EuroMLSys) 2023

The embarrassingly parallel nature of deep learning training tasks makes CPU-GPU co-processors the primary commodity hardware for them. The computing and memory requirements of these tasks, however, do not always align well with the available GPU resources. It is, therefore, important to monitor and profile the behavior of training tasks on co-processors to better understand the requirements of different use cases. In this paper, our goal is to shed more light on the variety of tools for profiling and monitoring deep learning training tasks on server-grade NVIDIA GPUs. In addition to surveying the main characteristics of the tools, we analyze the functional limitations and overheads of each tool using both a light and a heavy training scenario. Our results show that monitoring tools like nvidia-smi and dcgm can be integrated with resource managers for online decision making thanks to their low overheads. On the other hand, one has to be careful about the set of metrics to correctly reason about the GPU utilization. When it comes to profiling, each tool has its time to shine; a framework-based or system-wide GPU profiler can first detect the frequent kernels or bottlenecks, and then a lower-level GPU profiler can focus on particular kernels at the micro-architectural level.

2020

Approximate Nearest-Neighbour Fields via Massively-Parallel Propagation-Assisted K-D Trees

Cosmin Eugen Oancea, Ties Robroek, Fabian Gieseke

2020 IEEE International Conference on Big Data (Big Data) 2020

Nearest neighbour fields accurately and intuitively describe the transformation between two images and have been heavily used in computer vision. Generating such fields, however, is not an easy task due to the induced computational complexity, which quickly grows with the sizes of the images. Modern parallel devices such as graphics processing units offer a viable way of reducing the practical run time of such compute-intensive tasks. In this work, we propose a novel parallel implementation for one of the state-of-the-art methods for the computation of nearest neighbour fields, called propagation-assisted k-d trees. The resulting implementation yields valuable computational savings over a corresponding multi-core implementation. Additionally, it is tuned to consume only a little additional memory and is, hence, capable of dealing with high-resolution image data, which is vital as image quality standards keep rising.