Postdoctoral Researcher in Resource-Aware Machine Learning

I am a postdoc at the IT University of Copenhagen (ITU) working on Resource-Aware Machine Learning. Before joining ITU, I studied at Radboud University Nijmegen, where I graduated with a Master's degree in Data Science. As part of that degree, I completed a traineeship at the University of Copenhagen focused on massively parallel acceleration of machine learning on graphics cards.

My research focuses on improving the accessibility and sustainability of deep learning. In particular, I am interested in how reporting resource consumption can become a given rather than a nuisance. My current work focuses on improving the efficiency of data loading when training LLMs and convolutional networks. Beyond machine learning, I am interested in accelerating algorithms and graphics on graphics cards. I did my PhD in resource-aware data systems, supervised by Pınar Tözün.

As a researcher in RAD (Resource-Aware Data Science), I am part of the DASYA (Data-Intensive Systems and Applications) group at the IT University of Copenhagen. I am also a member of the DSAR (Data, Systems, and Robotics) section.

Education

IT University of Copenhagen, PhD Student in Resource-Aware Machine Learning, Sep. 2021 - Aug. 2024

Radboud University Nijmegen, MSc Data Science, Sep. 2017 - Jun. 2020

Radboud University Nijmegen, BSc Computing Science, Sep. 2013 - Jun. 2017

Experience

IT University of Copenhagen, Postdoctoral Researcher in Resource-Aware Machine Learning, Sep. 2024 - present

IT University of Copenhagen, PhD Student in Resource-Aware Machine Learning, Sep. 2021 - Aug. 2024

Hobbii, Data Scientist, Jul. 2020 - Aug. 2021

A.P. Møller - Mærsk, Data Scientist (Student Assistant), May 2019 - Oct. 2019

Forecast, Data Scientist, Nov. 2018 - Apr. 2019

Honors & Awards

Best Presentation Award, DEEM 2023

Selected Publications

TensorSocket: Shared Data Loading for Deep Learning Training

Ties Robroek, Neil Kim Nielsen, Pınar Tözün

International Conference on Management of Data (SIGMOD) 2026

TensorSocket: Shared Data Loading for Deep Learning Training

Ties Robroek, Neil Kim Nielsen, Pınar Tözün

International Conference on Management of Data (SIGMOD) 2026

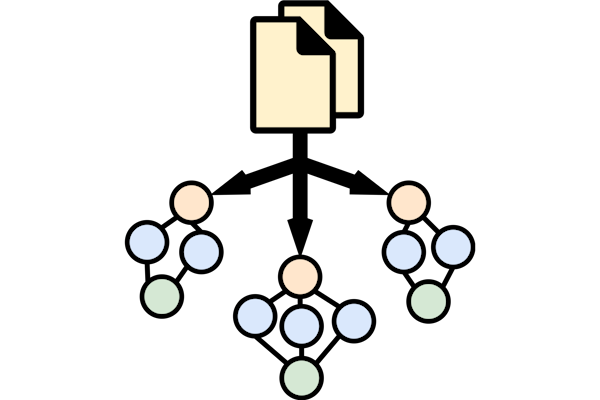

Training deep learning models is a repetitive and resource-intensive process. Data scientists often train several models before landing on a set of parameters (e.g., hyper-parameter tuning) and model architecture (e.g., neural architecture search), among other things that yield the highest accuracy. The computational efficiency of these training tasks depends highly on how well the training data is supplied to the training process. The repetitive nature of these tasks results in the same data processing pipelines running over and over, exacerbating the need for and costs of computational resources. In this paper, we present TensorSocket to reduce the computational needs of deep learning training by enabling simultaneous training processes to share the same data loader. TensorSocket mitigates CPU-side bottlenecks in cases where the collocated training workloads have high throughput on GPU, but are held back by lower data-loading throughput on CPU. TensorSocket achieves this by reducing redundant computations and data duplication across collocated training processes and leveraging modern GPU-GPU interconnects. While doing so, TensorSocket is able to train and balance differently-sized models and serve multiple batch sizes simultaneously and is hardware- and pipeline-agnostic in nature. Our evaluation shows that TensorSocket enables scenarios that are infeasible without data sharing, increases training throughput by up to 100%, and when utilizing cloud instances, achieves cost savings of 50% by reducing the hardware resource needs on the CPU side. Furthermore, TensorSocket outperforms the state-of-the-art solutions for shared data loading such as CoorDL and Joader; it is easier to deploy and maintain and either achieves higher or matches their throughput while requiring fewer CPU resources.
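The core idea of sharing one data loader across collocated training jobs can be illustrated with a deliberately simplified, single-process sketch. The idea is that each sample is loaded and preprocessed once, and every consumer then assembles batches of its own size from the shared stream. The names `SharedLoader`, `load_fn`, and `epoch` are hypothetical and are not TensorSocket's API. The real system runs across separate training processes and uses GPU-GPU interconnects, which this sketch does not model.

```python
from typing import Callable, Dict, Iterable, List


class SharedLoader:
    """Hypothetical sketch: one pass of (expensive) sample loading feeds
    several training consumers, each with its own batch size."""

    def __init__(self, load_fn: Callable[[int], int], batch_sizes: Dict[str, int]):
        self.load_fn = load_fn          # stand-in for decoding/augmenting one sample
        self.batch_sizes = batch_sizes  # consumer name -> batch size
        self.load_calls = 0             # how often load_fn actually ran

    def epoch(self, indices: Iterable[int]) -> Dict[str, List[List[int]]]:
        pending: Dict[str, List[int]] = {name: [] for name in self.batch_sizes}
        batches: Dict[str, List[List[int]]] = {name: [] for name in self.batch_sizes}
        for idx in indices:
            sample = self.load_fn(idx)  # loaded once, shared by all consumers
            self.load_calls += 1
            for name, size in self.batch_sizes.items():
                pending[name].append(sample)
                if len(pending[name]) == size:  # consumer's batch is full
                    batches[name].append(pending[name])
                    pending[name] = []
        # Emit partial final batches instead of dropping them.
        for name, rest in pending.items():
            if rest:
                batches[name].append(rest)
        return batches


if __name__ == "__main__":
    loader = SharedLoader(lambda i: i * i, {"small": 4, "large": 8})
    out = loader.epoch(range(10))
    print(loader.load_calls, out)
```

With two consumers over ten samples, `load_fn` runs 10 times instead of the 20 that two independent loaders would need. The saving grows with the number of collocated jobs, which is the redundancy the abstract describes removing.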

Modyn: Data-Centric Machine Learning Pipeline Orchestration

Maximilian Böther, Ties Robroek, Viktor Gsteiger, Xianzhe Ma, Pınar Tözün, Ana Klimovic

International Conference on Management of Data (SIGMOD) 2025

In real-world machine learning (ML) pipelines, datasets are continuously growing. Models must incorporate this new training data to improve generalization and adapt to potential distribution shifts. The cost of model retraining is proportional to how frequently the model is retrained and how much data it is trained on, which makes the naive approach of retraining from scratch each time impractical. We present Modyn, a data-centric end-to-end machine learning platform. Modyn's ML pipeline abstraction enables users to declaratively describe policies for continuously training a model on a growing dataset. Modyn pipelines allow users to apply data selection policies (to reduce the number of data points) and triggering policies (to reduce the number of trainings). Modyn executes and orchestrates these continuous ML training pipelines. The system is open-source and comes with an ecosystem of benchmark datasets, models, and tooling. We formally discuss how to measure the performance of ML pipelines by introducing the concept of composite models, enabling fair comparison of pipelines with different data selection and triggering policies. We empirically analyze how various data selection and triggering policies impact model accuracy, and also show that Modyn enables high throughput training with sample-level data selection.
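The abstract's point that retraining cost grows with both retraining frequency and training-set size can be made concrete with a toy simulation. The sketch below assumes one hypothetical triggering policy, which retrains once a fixed amount of new data has arrived. It also assumes one hypothetical data selection policy, which trains on a uniform random fraction of all data seen so far. Modyn has users declare such policies in a pipeline description. The functions here are illustrative stand-ins, not Modyn's API.

```python
import random
from typing import List, Sequence, Tuple


def data_amount_triggers(chunk_sizes: Sequence[int], threshold: int) -> List[int]:
    """Hypothetical triggering policy: retrain once at least `threshold`
    new samples have arrived since the last trigger."""
    triggers: List[int] = []
    new_since_last = 0
    for i, n in enumerate(chunk_sizes):
        new_since_last += n
        if new_since_last >= threshold:
            triggers.append(i)
            new_since_last = 0
    return triggers


def random_subset(pool: List[int], fraction: float, seed: int) -> List[int]:
    """Hypothetical data selection policy: keep a uniform random
    `fraction` of all samples seen so far (at least one sample)."""
    if not pool:
        return []
    k = min(len(pool), max(1, int(len(pool) * fraction)))
    return random.Random(seed).sample(pool, k)


def simulate(chunk_sizes: Sequence[int], threshold: int, fraction: float) -> Tuple[int, int]:
    """Return (number of retrainings, total samples trained on) for a growing
    dataset that receives chunk_sizes[i] new samples at step i."""
    pool: List[int] = []  # ids of every sample seen so far
    trained = 0
    triggers = set(data_amount_triggers(chunk_sizes, threshold))
    for i, n in enumerate(chunk_sizes):
        pool.extend(range(len(pool), len(pool) + n))  # append new sample ids
        if i in triggers:
            trained += len(random_subset(pool, fraction, seed=i))
    return len(triggers), trained


if __name__ == "__main__":
    chunks = [100] * 10
    print("naive:", simulate(chunks, threshold=100, fraction=1.0))
    print("policies:", simulate(chunks, threshold=300, fraction=0.5))
```

With ten chunks of 100 samples, the naive approach retrains from scratch on all data after every chunk. That means 10 retrainings over 5,500 samples in total. Triggering every 300 samples and selecting half of the data instead gives 3 retrainings over 900 samples. Whether the cheaper pipeline keeps its accuracy is exactly what Modyn's composite-model evaluation is meant to measure.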